Navigating Changes

Experiments as Repositories

Experiments as repositories is implemented as a portal core service under the reconciler architecture. The Git Core Service does the following:

- Observes when a new experiment is created through an etcd watcher.

- Creates a git repository in a K8s persistent volume using the excellent go-git library.

This is accomplished through the reconciler pattern described in the Reconciler Architecture section. The Git Core Service is also responsible for observing new commits that are pushed to the repository and creating observable etcd events for other reconcilers to pick up. The primary example is the Model Core Service: when a new commit is pushed, it needs to observe that a new revision is available and compile that revision. The Git Core Service accomplishes this by installing a post-receive hook in each Git repository it creates. On each push, git runs this hook, which places a new revision in etcd that other services can observe through watch notifications.

The Git service as a whole is made available to users through a K8s Ingress object that is put in place by the installer. The Git Core Service runs an HTTP service that uses HTTP Basic Authentication, as supported by Git clients. This allows tokens to be provided as user credentials in the same way GitHub and GitLab support client requests such as:

git clone https://<token>@git.mergetb.net/<project>/<experiment>

When the Git Core Service receives the token, it validates it with the internal Ory identity infrastructure and either allows or denies the request. Users can obtain their tokens through the new Merge command line tool:

mrg whoami -t

Leveraging K8s Ingress

The semantics of K8s Ingress are delightfully simple: define an external domain to listen on and plumb that listener to some service inside K8s. The installer is responsible for setting up Ingress objects. Currently there are four Portal Ingress objects.

- api.mergetb.net: Forwards REST API traffic to the Portal apiserver.

- grpc.mergetb.net: Forwards gRPC API traffic to the Portal apiserver.

- git.mergetb.net: Forwards Git HTTPS traffic to the Portal Core Git Service.

- auth.mergetb.net: Forwards the public Ory APIs to the Portal’s internal identity infrastructure.
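Conceptually, the ingress layer is a host-based routing table. The sketch below models the four objects above as a plain Go map so the lookup is explicit. It is not how Ingress objects are declared (that happens in K8s manifests), and the backend service names and ports are hypothetical placeholders.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// ingressRoutes models the four Portal Ingress objects as host -> in-cluster
// backend. The service names and ports are hypothetical placeholders.
var ingressRoutes = map[string]string{
	"api.mergetb.net":  "apiserver-rest:8080",  // REST API
	"grpc.mergetb.net": "apiserver-grpc:6000",  // gRPC API
	"git.mergetb.net":  "git:8080",             // Git over HTTPS
	"auth.mergetb.net": "identity-public:4433", // public Ory APIs
}

// backendFor resolves a request's Host header, which may include a port,
// to the backend service that should receive the traffic.
func backendFor(host string) (string, bool) {
	if h, _, err := net.SplitHostPort(host); err == nil {
		host = h
	}
	backend, ok := ingressRoutes[strings.ToLower(host)]
	return backend, ok
}

func main() {
	for _, host := range []string{"git.mergetb.net:443", "api.mergetb.net", "example.org"} {
		backend, ok := backendFor(host)
		fmt.Printf("%-22s -> %q (routed: %v)\n", host, backend, ok)
	}
}
```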

Because this obviates the need for an external HAProxy instance, we can install and run a complete portal on a single VM. I’m currently doing this using OKD’s CodeReady Containers.

Note: OKD ingress does not yet fully work with reencrypt routes. Reencrypt routes are useful because they let admins manage certificates at the edge (Ingress) for replicated services while still maintaining encryption within the K8s cluster. I have a PR open that addresses the issues I came across.

Single Integrated Portal API

The Merge Portal as a whole now has a single comprehensive API. In the gRPC API definition you will find many of the core service APIs organized as gRPC services, such as workspace. Also notice that every RPC call definition specifies HTTP options. This is how we tell the gRPC-Gateway generator to generate the corresponding REST API code for us. This top-level API object (poorly named xp.proto at the moment) only contains the form and structure of the API in terms of RPC calls. The data structure definitions are captured in a set of supporting protobuf definitions organized by service.

This set of protobufs is compiled in two phases:

- gRPC compilation: generates the gRPC client and server code.

- gRPC-Gateway compilation: generates the REST server code.

Implementing the gRPC service is business as usual and exactly the same as we’ve been doing for all of our gRPC services up to this point.

Implementing the REST service using the generated gateway code is very similar to the gRPC flow.

A New Merge CLI App

The availability of a public gRPC API dramatically reduces the complexity of implementing the Merge CLI application. We can now use the generated gRPC client code and data structures directly in the implementation of this CLI application. This code has been started in the ry-v1 branch.

Scalable Object Storage

We are now using MinIO to store object types that have the potential to be large. For the portal this includes:

- Compiled Experiment XIR

- Realizations

- Facility XIR

- Views

MinIO is deployed by the Portal Installer as a K8s pod and service. A MinIO client is available from the Portal Storage Library that uses access keys generated by the Portal Installer and provided to pods through plumbed environment variables.
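On the consuming side, a pod only has to collect those plumbed values before constructing a MinIO client. Here is a minimal sketch, assuming hypothetical variable names (`MINIO_ENDPOINT`, `MINIO_ACCESS_KEY`, `MINIO_SECRET_KEY`); the actual names used by the Portal Installer and Storage Library may differ. Taking the lookup function as a parameter lets the same code run against `os.LookupEnv` in a pod and against a map in tests.

```go
package main

import (
	"fmt"
	"os"
)

// StorageConfig holds the settings a MinIO client needs.
type StorageConfig struct {
	Endpoint  string
	AccessKey string
	SecretKey string
}

// Hypothetical names for the environment variables the installer plumbs
// into pods; the real Portal may use different ones.
const (
	envEndpoint = "MINIO_ENDPOINT"
	envAccess   = "MINIO_ACCESS_KEY"
	envSecret   = "MINIO_SECRET_KEY"
)

// storageConfigFromEnv reads the MinIO settings through lookup (os.LookupEnv
// in a pod) and reports every variable that is missing or empty.
func storageConfigFromEnv(lookup func(string) (string, bool)) (StorageConfig, error) {
	var cfg StorageConfig
	fields := []struct {
		name string
		dst  *string
	}{
		{envEndpoint, &cfg.Endpoint},
		{envAccess, &cfg.AccessKey},
		{envSecret, &cfg.SecretKey},
	}
	var missing []string
	for _, f := range fields {
		v, ok := lookup(f.name)
		if !ok || v == "" {
			missing = append(missing, f.name)
			continue
		}
		*f.dst = v
	}
	if len(missing) > 0 {
		return StorageConfig{}, fmt.Errorf("missing MinIO settings: %v", missing)
	}
	return cfg, nil
}

func main() {
	cfg, err := storageConfigFromEnv(os.LookupEnv)
	if err != nil {
		fmt.Println(err)
		return
	}
	// Print only the endpoint; the keys are secrets.
	fmt.Println("MinIO endpoint:", cfg.Endpoint)
}
```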

Here are a few examples of MinIO usage in my working branch of the Portal.

Reconciler Architecture

The reconciler architecture, as implemented in the Merge portal, is built on the following ideas:

- The apiserver defines the aggregate target state of the Portal by updating etcd, and to a certain extent MinIO, with target states in response to API calls.

- A collection of services reacts to target state updates and drives the actual state of underlying systems to the target state.

- When a service starts up, it observes the current state of the elements it presides over, reads the target state, and drives any mismatch toward the target. It then goes into the reactive mode described in the previous point.
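The three ideas above reduce to a small loop. Here is a minimal in-memory sketch, assuming target updates arrive on a channel (in the Portal they would come from an etcd watch) and that "driving" an element is just a map write. `State`, `Event` and `Reconciler` are hypothetical names, not the Portal's types.

```go
package main

import "fmt"

// State maps element names to their desired or observed state. It is a
// hypothetical stand-in for etcd (target) and the managed system (actual).
type State map[string]string

// Event is a target-state update, as an etcd watch might deliver it.
type Event struct {
	Key    string
	Value  string
	Delete bool
}

// Reconciler drives Actual toward Target.
type Reconciler struct {
	Target State
	Actual State
}

// apply reconciles a single element. A real service would create or delete
// a git repository, compile a model, and so on, instead of editing a map.
func (r *Reconciler) apply(key string) {
	want, targeted := r.Target[key]
	if !targeted {
		delete(r.Actual, key)
		return
	}
	if have, exists := r.Actual[key]; !exists || have != want {
		r.Actual[key] = want
	}
}

// Startup observes current state, reads target state, and fixes mismatches
// in both directions: stale elements are removed, missing ones created.
func (r *Reconciler) Startup() {
	for key := range r.Actual {
		r.apply(key)
	}
	for key := range r.Target {
		r.apply(key)
	}
}

// Run is the reactive mode: each update is recorded, then reconciled.
func (r *Reconciler) Run(events <-chan Event) {
	for ev := range events {
		if ev.Delete {
			delete(r.Target, ev.Key)
		} else {
			r.Target[ev.Key] = ev.Value
		}
		r.apply(ev.Key)
	}
}

func main() {
	r := &Reconciler{
		Target: State{"exp-a": "repo", "exp-b": "repo"},
		Actual: State{"exp-a": "repo", "exp-stale": "repo"},
	}
	r.Startup() // removes exp-stale, creates exp-b

	events := make(chan Event, 2)
	events <- Event{Key: "exp-c", Value: "repo"}
	events <- Event{Key: "exp-a", Delete: true}
	close(events)
	r.Run(events)

	fmt.Println(r.Actual) // map[exp-b:repo exp-c:repo]
}
```

Because `apply` only compares one element's target and actual state, the same function serves both the startup sweep and the reactive mode, which is what makes a restarted service converge without special recovery code.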

Portal services that currently implement the reconciler architecture are:

- git
  - Observes: Experiment create/delete
  - Drives: Git repository create/delete
  - Notifies: Git push events

- model
  - Observes: Git push events
  - Drives: Model compilation and XIR data management
  - Notifies: Experiment compilation events

- realize
  - Observes: Realization requests
  - Drives: Model embedding and resource allocation
  - Notifies: Realization completion events

Portal services that still need to be implemented are:

- xdc
  - Observes: XDC requests
  - Drives: K8s XDC pods and services
  - Notifies: XDC availability

- mergefs
  - Observes: Experiment and Project create/delete
  - Drives: Mergefs users and groups
  - Notifies: Mergefs user/project readiness

- credential manager
  - Observes: Experiment and Project create/delete
  - Drives: SSH key provisioning
  - Notifies: SSH key availability

- materialization
  - Observes: Materialization requests
  - Drives: Multi-site materializations and route reflectors
  - Notifies: Materialization state

Protocol Buffers based XIR

In v0.9 there was a generalized XIR model into which both experiments and facilities were crammed. In v1 this model is flipped on its head: we start with well-defined experiment and facility models and provide the ability to lift those models into a generalized network representation. The following represents the model graphically.

Here the generalized network model is composed of

- Devices

- Interfaces that belong to devices.

- Edges that belong to connections that can plug into interfaces.

- Connections that connect devices through interfaces and edges.

Experiment network models are composed of

- Nodes

- Sockets that belong to nodes

- Endpoints that belong to links and plug into sockets

- Links that connect nodes through sockets and endpoints

Resource network models are composed of

- Resources

- Ports that belong to resources

- Connectors that belong to cables and plug into ports

- Cables that connect resources through ports and connectors

Physical network models are composed of

- Phyos (physical objects)

- Variables that belong to phyos

- Couplings that belong to bonds and attach to variables

- Bonds that connect phyos through variables and couplings

Mechanically, the XIR data objects are defined in the core.proto protobuf file, and there are libraries surrounding these generated data structures for Go and Python. At the current time, the Go library is geared toward describing testbed facilities and the Python library toward describing experiments.
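To make the "lifting" idea concrete, here is a minimal sketch that lifts the experiment model into the generalized network model: nodes become devices, sockets become interfaces, endpoints become edges, and links become connections. These Go structs are hypothetical simplifications written for illustration; the real types are generated from core.proto and carry far more detail. Resource and physical models would lift the same way with their own vocabulary.

```go
package main

import "fmt"

// Experiment model (hypothetical simplification, not the core.proto types).
type Socket struct{ Index int }

type Node struct {
	ID      string
	Sockets []Socket
}

// Endpoint belongs to a link and plugs into a socket on a node.
type Endpoint struct {
	Node   string
	Socket int
}

type Link struct {
	ID        string
	Endpoints []Endpoint
}

type Experiment struct {
	Nodes []Node
	Links []Link
}

// Generalized network model.
type Interface struct {
	Device string
	Index  int
}

type Device struct {
	ID         string
	Interfaces []Interface
}

// Edge belongs to a connection and plugs into an interface.
type Edge struct{ Interface Interface }

type Connection struct {
	ID    string
	Edges []Edge
}

type Network struct {
	Devices     []Device
	Connections []Connection
}

// lift maps nodes to devices, sockets to interfaces, endpoints to edges and
// links to connections, rejecting endpoints that plug into unknown sockets.
func lift(x Experiment) (Network, error) {
	var nw Network
	known := map[Interface]bool{}
	for _, n := range x.Nodes {
		d := Device{ID: n.ID}
		for _, s := range n.Sockets {
			ifx := Interface{Device: n.ID, Index: s.Index}
			d.Interfaces = append(d.Interfaces, ifx)
			known[ifx] = true
		}
		nw.Devices = append(nw.Devices, d)
	}
	for _, l := range x.Links {
		c := Connection{ID: l.ID}
		for _, e := range l.Endpoints {
			ifx := Interface{Device: e.Node, Index: e.Socket}
			if !known[ifx] {
				return Network{}, fmt.Errorf("link %s: no socket %d on node %s", l.ID, e.Socket, e.Node)
			}
			c.Edges = append(c.Edges, Edge{Interface: ifx})
		}
		nw.Connections = append(nw.Connections, c)
	}
	return nw, nil
}

func main() {
	x := Experiment{
		Nodes: []Node{
			{ID: "a", Sockets: []Socket{{Index: 0}}},
			{ID: "b", Sockets: []Socket{{Index: 0}}},
		},
		Links: []Link{{ID: "l0", Endpoints: []Endpoint{{Node: "a", Socket: 0}, {Node: "b", Socket: 0}}}},
	}
	nw, err := lift(x)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("%d devices, %d connections\n", len(nw.Devices), len(nw.Connections))
}
```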

Virtualization Support

The initial cut of virtualization support is up and working using Minimega from Sandia. Minimega is a great technology; however, it is not designed to operate as a long-lived daemon. It is designed to spin up and tear down with experiments, which creates friction points when trying to implement a reliable virtualization service. Discussion of the general implementation of virtualization can be found at the following link

The basic design of the current Minimega system is to install Minimega on each infrapod server as a head node. Head nodes do not actually spawn VMs; instead they expose the Minimega CLI API (another friction point; something like gRPC would be nice) to the Cogs for controlling machines that are imaged with a hypervisor/Minimega image. In this way Rex can use the Minimega API to manage mesh state with other Minimega nodes over the testbed management network and deploy virtual machines. If a hypervisor or VM process crashes, it is entirely up to the Cogs to detect this and reconstitute the hypervisor or VM state once it becomes possible to do so again.

We have our own branch of Minimega. The primary modification in that repo is support for vanilla Linux bridging, to avoid having to use Open vSwitch.

I’m going to try my best to stick with Minimega. If it turns out not to be a great fit (not because Minimega isn’t great, but because we are covering different use cases and this may be a square peg in a round hole), then I’ll implement a simple QEMU/KVM reconciler daemon that integrates with the Cogs etcd.

Experiment Mass Storage

Rally is on its way to being integrated with my v1 branches thanks to the efforts of @lincoln. I’ve not had enough hands-on time with Rally yet to provide any meaningful development guidance here.

Experiment Orchestration

Coming soon!

Certificate Based SSH

This is on the TODO list. It should take the form of a reconciler in the portal, as outlined in the Reconciler Architecture section. Based on my reading of how user SSH certificates are commonly deployed, there are two paths here. Some references here

- Issue short-lived (1 day) keys from the API to users in exchange for an authentication token, plus short-lived or single-use certs to tools through JWKs.

- Create long-lived (permanent but revocable) keys from a reconciler when users are created.

For host SSH certificates, we have two targets we need to provision certs for:

- XDCs

- Testbed nodes

XDC host certificates should be generated by the XDC reconciler. The testbed node certificates should be generated by the materialization reconciler.

Self Contained Authentication

Self contained authentication has been in the works for quite some time, thanks to the efforts of @glawler. Here I’ll outline how things are currently integrated.

The Portal Installer is responsible for provisioning the Ory Identity Infrastructure inside the Portal K8s cluster.

When a request comes into the portal, the vast majority of the RPC service endpoints implement an authentication check that extracts a token from the gRPC context metadata and checks that token with the internal Ory Kratos identity server.
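The per-RPC check can be sketched as two small steps: pull the token out of the request metadata, then hand it to the identity server. In a real gRPC server the metadata would come from `metadata.FromIncomingContext(ctx)` in google.golang.org/grpc/metadata; to keep the sketch dependency-free it uses a local `MD` type with the same map shape. The `authorization` key, the `Bearer` scheme and the `validate` callback are assumptions, not the Portal's actual conventions.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// MD has the same shape as gRPC metadata (metadata.MD is a
// map[string][]string with lower-case keys).
type MD map[string][]string

var errNoToken = errors.New("no bearer token in request metadata")

// tokenFromMetadata returns the token from an "authorization: Bearer <t>"
// entry. The key name and Bearer scheme are assumptions about the client.
func tokenFromMetadata(md MD) (string, error) {
	for _, v := range md["authorization"] {
		scheme, token, ok := strings.Cut(strings.TrimSpace(v), " ")
		token = strings.TrimSpace(token)
		if ok && strings.EqualFold(scheme, "bearer") && token != "" {
			return token, nil
		}
	}
	return "", errNoToken
}

// checkAuth is the per-RPC guard. validate is a hypothetical callback that
// would ask the Ory Kratos identity server whether the token is valid.
func checkAuth(md MD, validate func(token string) error) error {
	token, err := tokenFromMetadata(md)
	if err != nil {
		return err
	}
	return validate(token)
}

func main() {
	known := map[string]bool{"abc123": true}
	validate := func(token string) error {
		if !known[token] {
			return errors.New("token rejected by identity server")
		}
		return nil
	}
	fmt.Println(checkAuth(MD{"authorization": {"Bearer abc123"}}, validate))
	fmt.Println(checkAuth(MD{}, validate))
}
```

Keeping extraction separate from validation means the same guard can be called from a gRPC interceptor or from individual handlers.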

Login for API clients is now built directly into the API. Login for web clients should use the Ory API directly, which is exposed by a dedicated Portal Ingress as described in the Leveraging K8s Ingress section.

We are using Ory Kratos v0.5, which has excellent documentation.

At the current time, we have only integrated Ory Kratos and not Ory Hydra, which means we cannot support OAuth delegation flows from external entities such as GitHub and GitLab. I view this as absolutely critical to support, but it probably won’t make it into the first v1 release. We do need to study whether we can implement full OAuth support within the v1 release without breaking the current Kratos mechanisms.

Self Contained Registry

We essentially got this for free by using OKD. OKD comes with a built-in registry that provides integrated OAuth token flows for managing the container registry, as well as integration with K8s RBAC for segregating parts of the registry in different K8s namespaces (what OKD calls projects) to different clients. This is a really nice system, and it is what the Portal Installer uses to push containers into the Portal at install time.

The idea here is also for Cogs infrapods to pull containers from this Portal based registry for walled garden environments.

Self Contained Installers

The installer for the Portal is a thing and it works.

It assumes that OKD/K8s has already been set up, and it requires the user to provide kubectl credentials and a basic configuration that describes how the portal should be set up and supplies specific keys for things like registry access.

On install, the Portal Installer also dynamically generates some configuration, which is located in the .conf folder within the installer’s working directory. The generated config is in YAML and looks like the following.

auth:
  cookiesecret: BpqacfbmjiQE93NW146ZYA2CP50v8Gt7
  postgrespw: CX4Oh5t0D7imZ381VcrFK2GdHoJs9p6j
minio:
  access: gnnRpkm9RNvTNqxuUMCofimQCouPHKFN
  secret: XErHLFaf4uta6q48um6aybxGUYmrvgdy
oauth:
  salt: 6BIyTWvd18Lh5s4mOSEGwb70Mn92P3Nj
  systemsecret: NQpSsxMH4Tv1g82hoi9F76DmlJKP35O0
merge:
  opspw: 4c9k8TUv2fj01Rq6PH5sdY7CzVJQibN3

As you can see, this consists entirely of generated passwords. These can be changed later, but generated passwords were deemed a better starting point than default passwords.
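Each value in the sample above is a 32-character alphanumeric string. Here is a minimal sketch of generating secrets of that shape with `crypto/rand`; the length and alphabet are inferred from the sample, and this is not the installer's actual code. Drawing each index with `rand.Int` keeps every character uniformly distributed, avoiding the bias of taking a random byte modulo 62.

```go
package main

import (
	"crypto/rand"
	"fmt"
	"math/big"
)

// alphabet matches the character set seen in the generated config sample.
const alphabet = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"

// randomSecret returns an n-character string drawn from alphabet using
// crypto/rand. rand.Int picks each index uniformly, avoiding modulo bias.
func randomSecret(n int) (string, error) {
	limit := big.NewInt(int64(len(alphabet)))
	b := make([]byte, n)
	for i := range b {
		idx, err := rand.Int(rand.Reader, limit)
		if err != nil {
			return "", err
		}
		b[i] = alphabet[idx.Int64()]
	}
	return string(b), nil
}

func main() {
	// Emit a YAML fragment shaped like the installer's generated config.
	sections := []struct {
		name string
		keys []string
	}{
		{"auth", []string{"cookiesecret", "postgrespw"}},
		{"minio", []string{"access", "secret"}},
	}
	for _, s := range sections {
		fmt.Printf("%s:\n", s.name)
		for _, k := range s.keys {
			secret, err := randomSecret(32)
			if err != nil {
				panic(err)
			}
			fmt.Printf("  %s: %s\n", k, secret)
		}
	}
}
```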

Organizations

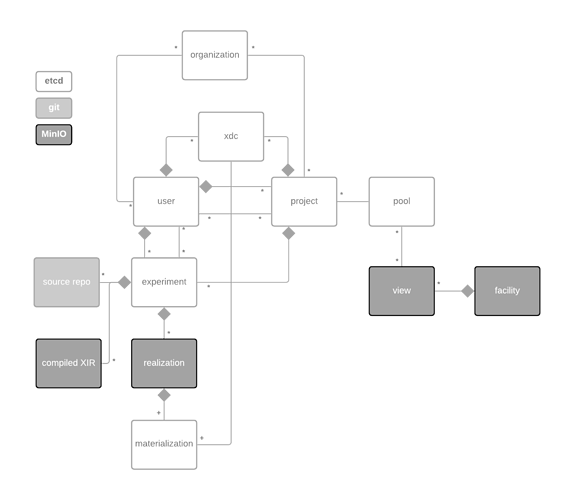

This has yet to be implemented; the figure above shows how it will fit into the overall picture.

Cyber Physical Systems Support

CPS support consists of the following:

- Expression in Merge MX which includes the ability to

- Representation in XIR, covered briefly in the XIR section

- Translation of a physical XIR model into an executable system of differential-algebraic equations with a viable set of initial conditions (simd).

- User control interfaces for physics simulation (simctld).

- Physical system simulation (cypress)
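For reference, a system of differential-algebraic equations in the common semi-explicit form, with differential states $x$ and algebraic variables $y$, looks like the following. A viable (consistent) set of initial conditions must at least satisfy the algebraic constraints at the start time $t_0$; for higher-index systems, the time derivatives of those constraints impose further conditions. This is generic textbook notation, not simd's internal representation.

```latex
\begin{aligned}
\dot{x}(t) &= f\bigl(x(t),\, y(t),\, t\bigr) \\
0          &= g\bigl(x(t),\, y(t),\, t\bigr) \\
0          &= g\bigl(x_0,\, y_0,\, t_0\bigr) \quad \text{(consistent initial conditions)}
\end{aligned}
```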